Recall that the coproduct of A and B is an object A+B, together with injections i1: A -> A+B, i2: B-> A+B, with the property that whenever we have arrows f: A -> T, g: B -> T, they can be factored through A+B to give an arrow f+g, satisfying f+g . i1 = f, f+g . i2 = g.

Firstly then, I forgot to say why we called the coproduct A+B with a plus sign. Well, it's because, via the injections i1 and i2, it contains (a copy of) A and (a copy of) B. So it's a bit like a sum of A and B.

Second, I forgot to say that in the case of vector spaces, the coproduct is called the direct sum, and has its own special symbol A⊕B.

Okay, so this time I want to look at products.

Suppose we have objects A and B in some category. Then their product (if it exists) is an object A×B, together with projections p1: A×B -> A, p2: A×B -> B, with the following universal property: whenever we have arrows f: S -> A and g: S -> B, then they can be factored through A×B to give an arrow f×g: S -> A×B, such that f = p1 . f×g, g = p2 . f×g.

(The definitions of product and coproduct are dual to one another - the diagrams are the same but with the directions of the arrows reversed.)

In the category Set, the product of sets A and B is their Cartesian product A×B. In the category Hask, of course, the product of types a and b is written (a,b), p1 is called fst, and p2 is called snd.

We can then define the required product map as:

(f .*. g) x = (f x, g x)Then it should be clear that fst . (f .*. g) = f, and snd . (f .*. g) = g, as required.

Okay, so what do products look like in the category of lists (free monoids)? (Recall that in this category, the arrows are required to be monoid homomorphisms, meaning that f [] = [] and f (xs++ys) = f xs ++ f ys. It follows that we can express f = concatMap f', for some f'.)

Well, the obvious thing to try as the product is the Cartesian product ([a],[b]). Is the Cartesian product of two monoids a monoid? Well yes it is actually. We could give it a monoid structure as follows:

(as1, bs1) ++ (as2, bs2) = (as1++as2, bs1++bs2)

[] = ([],[])This isn't valid Haskell code of course. It's just my shorthand way of expressing the following code from Data.Monoid:

instance Monoid [a] where

mempty = []

mappend = (++)

instance (Monoid a, Monoid b) => Monoid (a,b) where

mempty = (mempty, mempty)

(a1,b1) `mappend` (a2,b2) =

(a1 `mappend` a2, b1 `mappend` b2)

From these two instances, it follows that ([a],[b]) is a monoid, with monoid operations equivalent to those I gave above. (In particular, it's clear that the construction satisfies the monoid laws: associativity of ++, identity of [].)

But it feels like there's something unsatisfactory about this. Wouldn't it be better for the product of list types [a] and [b] to be another list type [x], for some type x?

Our first thought might be to try [(a.b)]. The product map would then need to be something like \ss -> zip (f ss) (g ss). However, we quickly see that this won't work: what if f ss and g ss are not the same length.

What else might work? Well, if you think of ([a],[b]) as some as on the left and some bs on the right, then the answer should spring to mind. Let's try [Either a b]. We can then define:

p1 xs = [x | Left x <- xs] -- this is doing a filter and a map at the same time

p2 xs = [x | Right x <- xs]with the product map

f×g = \ss -> map Left (f ss) ++ map Right (g ss)Then it is clear that p1 . f×g = f and p2 . f×g = g, as required.

What is the relationship between ([a],[b]) and [Either a b]? Well, ([a],[b]) looks a bit like a subset of [Either a b], via the injection i (as,bs) = map Left as ++ map Right bs. However, this injection is not a monoid homomorphism, since

i ([],[b1] ++ ([a1],[]) /= i ([],[b1]) ++ i ([a1],[])So ([a],[b]) is not a submonoid of [Either a b].

On the other hand, there is a projection p :: [Either a b] -> ([a],[b]), p xs = (p1 xs, p2 xs). This is a monoid homomorphism, so ([a],[b]) is a quotient of [Either a b].

So which is the right answer? Which of ([a],[b]) and [Either a b] is really the product of [a] and [b]?

Well, it depends. It depends which category we think we're working in. If we're working in the category of monoids, then it is ([a],[b]). However, if we're working in the category of free monoids (lists), then it is [Either a b].

You see, ([a],[b]) is not a free monoid. What does this mean? Well, it basically means it's not a list. But how do we know that ([a],[b]) isn't equivalent to some list? And anyway, what does "free" mean in free monoid?

"Free" is a concept that can be applied to many algebraic theories, not just monoids. There is more than one way to define it.

An algebraic theory defines various constants and operations. In the case of monoids, there is one constant - which we may variously call [] or mempty or 0 or 1 - and one operation - ++ or mappend or + or *. Now, a given monoid may turn out to be generated by some subset of its elements - meaning that every element of the monoid can be equated with some expression in the generators, constants, and operations.

For example, the monoid of natural numbers is generated by the prime numbers: every natural number is equal to some expression in 1, *, and the prime numbers. The monoid [x] is generated by the singleton lists: every element of [x] is equal to some expression in [], ++, and the singleton lists. By a slight abuse of notation, we can say that [x] is generated by x - by identifying the singleton lists with the image of x under \x -> [x].

Then we say that a monoid is free on its generators if there are no relations among its elements other than those implied by the monoid laws. That is, no two expressions in the generators, constants, and operators are equal to one another, unless it is as a consequence of the monoid laws.

For example, suppose it happens that

(x ++ y) ++ z = x ++ (y ++ z)That's okay, because it follows from the monoid laws. On the other hand, suppose that

x ++ y = y ++ xThis does not follow from the monoid laws (unless x = [] or y = []), so is a non-trivial relation. (Thus the natural numbers under multiplication are not a free monoid - because they're commutative.)

What about our type ([a],[b]) then? Well consider the following relations:

(as,[]) ++ ([],bs) = ([],bs) ++ (as,[])

(as1++as2,bs1) ++ ([],bs2) = (as1,bs1) ++ (as2,bs2) = (as1,[]) ++ (as2,bs1++bs2)We have commutativity relations between the [a] and [b] parts of the product. Crucially, these relations are not implied by the monoid structure alone. So intuitively, we can see that ([a],[b]) is not free.

The "no relations" definition of free is the algebraic way to think about it. However, there is also a category theory way to define it. The basic idea is that if a monoid is free on its generators, then given any other monoid with the same generators, we can construct it as a homomorphic image of our free monoid, by "adding" the appropriate relations.

In order to express this properly, we're going to need to use some category theory, and specifically the concept of the forgetful functor. Recall that given any algebraic category, such as Mon (monoids), there is a forgetful functor U: Mon -> Set, which consists in simply forgetting the algebraic structure. U takes objects to their underlying sets, and arrows to the underlying functions. In Haskell, U: Mon -> Hask consists in forgetting that our objects (types) are monoids, and forgetting that our arrows (functions) are monoid homomorphisms. (As a consequence, U is syntactically invisible in Haskell. However, to properly understand the definition of free, we have to remember that it's there.)

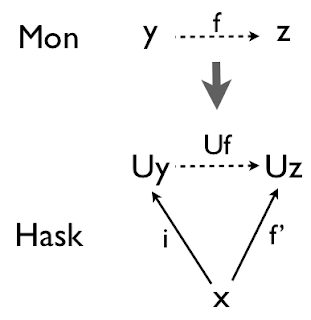

Then, given an object x (the generators), a free monoid on x is a monoid y, together with a function i: x -> U y, such that whenever we have an object z in Mon and a function f': x -> U z, then we can lift it to a unique arrow f: y -> z, such that f' = Uf . i.

When we say that lists are free monoids, we mean specifically that (the type) [x] is free on (the type) x, via the function i = \x -> [x] (on values). This is free, because given any other monoid z, and function f' :: x -> z, then we can lift to a monoid homomorphism f :: [x] -> z, with f' = f . i. How? Well, the basic idea is to use concatMap. The type of concatMap is:

concatMap :: (a -> [b]) -> [a] -> [b]So it's doing the lifting we want. However this isn't quite right, because this assumes that the target monoid z is a list. So we need this slight variant:

mconcatmap :: (Monoid z) => (x -> z) -> [x] -> z

mconcatmap f xs = mconcat (map f xs)If we set f = mconcatmap f', then we will have

(f . i) x

= f (i x)

= f [x]

= mconcatmap f' [x]

= mconcat (map f' [x])

= mconcat [f' x]

= foldr mappend mempty [f' x] -- definition of mconcat

= mappend mempty (f' x) -- definition of foldr

= f' x -- identity of memptyNow, what would it mean for ([a],[b]) to be free? Well, first, what is it going to be free on? To be free on a and b is the same as being free on Either a b (the disjoint union of a and b). Then our function i is going to be

i (Left a) = ([a],[])

i (Right b) = ([],[b])Then for ([a],[b]) to be free would mean that whenever we have a function f' :: Either a b -> z, with z a monoid, then we can lift it to a monoid homomorphism f : ([a],[b]) -> z, such that f' = f . i.

So can we?

Well, what if our target monoid z doesn't satisfy the a-b commutativity relations that we saw. That is, what if:

f' a1 `mappend` f' b1 /= f' b1 `mappend` f' a1 -- (A)That would be a problem.

We are required to find an f such that f' = f . i.

We know that i a1 = ([a1],[]), i b1 = ([],[b1]). So we know that i a1 `mappend` i b1 = i b1 `mappend` i a1.

f is required to be a monoid homomorphism, so by definition:

f (i a1 `mappend` i b1) = f (i a1) `mappend` f (i b1)

f (i b1 `mappend` i a1) = f (i b1) `mappend` f (i a1)But then since the two left hand sides are equal, then so are the two right hand sides, giving:

f (i a1) `mappend` f (i b1) = f (i b1) `mappend` f (i a1) -- (B)But now we have a contradiction between (A) and (B), since f' = f . i.

So for a concrete counterexample, showing that ([a],[b]) is not free, all we need is a monoid z in which the a-b commutativity relations don't hold. Well that's easy: [Either a b]. Just take f' :: Either a b -> [Either a b], f' = \x -> [x]. Now try to find an f :: ([a],[b]) -> [Either a b], with f' = f . i.

The obvious f is f (as,bs) = map Left as ++ map Right bs.

But the problem is that this f isn't a monoid homomorphism:

f ( ([],[b1]) `mappend` ([a1],[]) ) /= f ([],[b1]) `mappend` f ([a1],[])Notice the connection between the two definitions of free. It was because ([a],[b]) had non-trivial relations that we couldn't lift a function to a monoid homomorphism in some cases. The cases where we couldn't were where the target monoid z didn't satisfy the relations.

Okay, so sorry, that got a bit technical. To summarise, the product of [a], [b] in the category of lists / free monoids is [Either a b].

What about vector spaces? What is the product of Vect k a and Vect k b?

Well, similarly to lists, we can make (Vect k a, Vect k b) into a vector space, by defining

0 = (0,0)

(a1,b1) + (a2,b2) = (a1+a2,b1+b2)

k(a,b) = (ka,kb)

Exercise: Show that with these definitions, fst, snd and f .*. g are vector space morphisms (linear maps).

Alternatively, Vect k (Either a b) is of course a vector space. We can define:

p1 = linear p1' where

p1' (Left a) = return a

p1' (Right b) = zero

p2 = linear p2' where

p2' (Left a) = zero

p2' (Right b) = return b

prodf f g = linear fg' where

fg' b = fmap Left (f (return b)) <+> fmap Right (g (return b))

In this case p1, p2, f×g are vector space morphisms by definition, since they were constructed using "linear". How do we know that they satisfy the product property? Well, this looks like a job for QuickCheck. The following code builds on the code we developed last time:

prop_Product (f',g',x) =

f x == (p1 . fg) x &&

g x == (p2 . fg) x

where f = linfun f'

g = linfun g'

fg = prodf f g

newtype SBasis = S Int deriving (Eq,Ord,Show,Arbitrary)

prop_ProductQn (f,g,x) = prop_Product (f,g,x)

where types = (f,g,x) :: (LinFun Q SBasis ABasis, LinFun Q SBasis BBasis, Vect Q SBasis)

> quickCheck prop_ProductQn

+++ OK, passed 100 tests.

As we did with lists, we can ask again, which is the correct definition of product, (Vect k a, Vect k b), or Vect k (Either a b)?

Well, in this case it turns out that they are equivalent to one another, via the mutually inverse isomorphisms

\(va,vb) -> fmap Left va <+> fmap Right vb

\v -> (p1 v, p2 v)Unlike in the list case, these are both vector space morphisms (linear functions).

Why the difference? Why does it work out for vector spaces whereas it didn't for lists? Well, I think it's basically because vector spaces are commutative.

(It is also the case that vector spaces are always free on a basis. So since we have an obvious bijection between the bases of (Vect k a, Vect k b) and Vect k (Either a b), then we must have an isomorphism between the vector spaces.)

Now, we're left with a little puzzle. We have found that both the product and the coproduct of two vector spaces is Vect k (Either a b). So we still haven't figured out what Vect k (a,b) represents.

I'd just like to take the time to say that I really enjoy reading your blog. In fact, not just reading but learning. So, please at no cost stop doing what you are doing... Quality like this doesn't come easy, or free :-)

ReplyDelete